Prof. Dr. Mario Fritz

Faculty

CISPA Helmholtz Center for Information Security

Professor

Saarland University

Fellow

European Laboratory for Learning and Intelligent Systems (ELLIS)

We are looking for PhD students and Post-Docs! Please get in touch.

My group is working on Trustworthy Information Processing with a focus on the intersection of AI & Machine Learning with Security & Privacy.

Recent projects and initiatives related to trustworthy AI/ML, health, and privacy:

- Coordinator and PI: European Lighthouse on Secure and Safe AI (ELSA)

- Coordinator and PI: PriSyn: Representative, synthetic health data with strong privacy guarantees (BMBF)

- PI: AIgency “Opportunities and Risks of generative AI in Cybersecurity” (BMBF)

- PI: PrivateAIM: Secure distributed analysis of medical data (Medical Informatics Initiative)

- Leading Scientist: Helmholtz Medical Security, Privacy, and AI Research Center (HMSP)

- Coordinator and PI: ImageTox: Automated image-based detection of early toxicity events in zebrafish larvae

- PI: Integrated Early Warning System for Local Recognition, Prevention, and Control for Epidemic Outbreaks (LOKI)

- Partner-PI: The German Human Genome-Phenome Archive (GHGA)

- Coordinator and PI: Trustworthy Federated Data Analytics Project (TFDA)

- Coordinator and PI: Protecting Genetic Data with Synthetic Cohorts from Deep Generative Models (PRO-GENE-GEN)

- Member of the BMBF “Forum Gesundheit” working group on making digital data usable for AI development in health research (“AG Nutzbarmachung digitaler Daten für KI-Entwicklungen in der Gesundheitsforschung”)

Recent work on LLMs, DeepFake/misinformation detection, attribution, and responsible disclosure:

- NAACL-Findings’24: PoLLMgraph: Unraveling Hallucinations in Large Language Models via State Transition Dynamics

- NAACL-Findings’24: SimSCOOD: Systematic Analysis of Out-of-Distribution Generalization in Fine-tuned Source Code Models

- ICLR-SET’24: Can LLMs Separate Instructions From Data? And What Do We Even Mean By That?

- ICLR-LLMAgents’24: LLM-Deliberation: Evaluating LLMs with Interactive Multi-Agent Negotiation Games

- ArXiv’24: LLM Task Interference: An Initial Study on the Impact of Task-Switch in Conversational History

- SATML’24: CodeLMSec Benchmark: Systematically Evaluating and Finding Security Vulnerabilities in Black-Box Code Language Models

- ArXiv’24: Exploring Value Biases: How LLMs Deviate Towards the Ideal

- BlackHat’23: Compromising LLMs: The Advent of AI Malware

- AISec’23: Not what you’ve signed up for: Compromising Real-World LLM-Integrated Applications with Indirect Prompt Injection

- Usenix’23: UnGANable: Defending Against GAN-based Face Manipulation

- Usenix’23: Fact-Saboteurs: A Taxonomy of Evidence Manipulation Attacks against Fact-Verification Systems

- CVPR’22: Open-Domain, Content-based, Multi-modal Fact-checking of Out-of-Context Images via Online Resources

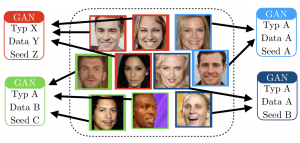

- ICLR’22: Responsible Disclosure of Generative Models Using Scalable Fingerprinting

- ICCV’21: Artificial Fingerprinting for Generative Models: Rooting Deepfake Attribution in Training Data

- S&P’21: Adversarial Watermarking Transformer: Towards Tracing Text Provenance with Data Hiding

- IJCAI’21: Beyond the Spectrum: Detecting Deepfakes via Re-Synthesis

- CVPR’21: Hijack-GAN: Unintended-Use of Pretrained, Black-Box GANs

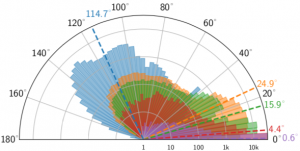

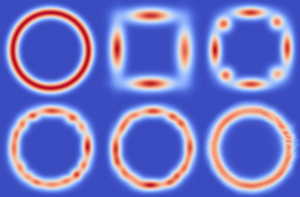

- ICCV’19: Attributing Fake Images to GANs: Learning and Analyzing GAN Fingerprints

Most recent work on ArXiv:

- LLM-Deliberation: Evaluating LLMs with Interactive Multi-Agent Negotiation Games

- More than you’ve asked for: A Comprehensive Analysis of Novel Prompt Injection Threats to Application-Integrated Large Language Models

- A Unified View of Differentially Private Deep Generative Modeling

- Data Forensics in Diffusion Models: A Systematic Analysis of Membership Privacy

- Systematically Finding Security Vulnerabilities in Black-Box Code Generation Models

- Fed-GLOSS-DP: Federated, Global Learning using Synthetic Sets with Record Level Differential Privacy

- Holistically Explainable Vision Transformers

- SimSCOOD: Systematic Analysis of Out-of-Distribution Behavior of Source Code Models

- Availability Attacks Against Neural Network Certifiers Based on Backdoors

News, talks, events:

- Keynote at AISec’23 on “Trustworthy AI and A Cybersecurity Perspective on Large Language Models”

- Panelist on “AI for Cybersecurity and Adversarial AI” at EU AI Alliance Assembly

- Invited talk at ICCV’23 workshop BRAVO: roBustness and Reliability of Autonomous Vehicles in the Open-world

- Invited talk at ICCV’23 Workshop on DeepFake Analysis and Detection

- Invited talk at ICCV’23 Workshop on Out Of Distribution Generalization in Computer Vision

- Lecturer at ELLIS Summer School on Large-Scale AI for Research and Industry

- Talk at Deutscher EDV Gerichtstag

- Talk at AI, Neuroscience and Hardware: From Neural to Artificial Systems and Back Again

- Scientific Advisory Board: Bosch AIShield

- Steering Board: Helmholtz.AI

- Recent program committees: ICML’21, NeurIPS’21, S&P’22, EuroS&P’22, CVPR’22 (AC), CCS’22

- Runner-up Inria/CNIL Privacy Protection Prize 2020 (S&P’20 paper: “Automatically Detecting Bystanders in Photos to Reduce Privacy Risks”)

- Co-Organizer of ICLR’21 Workshop on “Synthetic Data Generation – Quality, Privacy, Bias”

- Co-Organizer of CVPR’21 Workshop on “QuoVadis: Interdisciplinary, Socio-Technical Workshop on the Future of Computer Vision and Pattern Recognition (QuoVadis-CVPR)”

- Co-Organizer of CVPR’21 Workshop on “Causality in Vision”

- Founding member of Saarbrücken Artificial Intelligence & Machine Learning (SAM) unit of the European Laboratory of Learning and Intelligent Systems (ELLIS)

- Lecturer at Digital CISPA Summer School 2020

- Co-Organizer of Third International Workshop on The Bright and Dark Sides of Computer Vision: Challenges and Opportunities for Privacy and Security (CV-COPS) at ECCV 2020

- Co-Organizer: 4th ACM Symposium on Computer Science in Cars: Future Challenges in Artificial Intelligence & Security for Autonomous Vehicles CSCS’20

- Keynote at Workshop Machine Learning for Cybersecurity, ECMLPKDD’19

- Talk at Cyber Defense Campus (CYD) Conference on Artificial Intelligence in Defence and Security

- Co-Organizer of Second International Workshop on The Bright and Dark Sides of Computer Vision: Challenges and Opportunities for Privacy and Security (CV-COPS) at CVPR 2019

- Co-Organizer: 3rd ACM Symposium on Computer Science in Cars: Future Challenges in Artificial Intelligence & Security for Autonomous Vehicles CSCS’19

- Leading scientist at new Helmholtz Medical Security and Privacy Research Center

- Member of ACM Technical Policy Committee Europe

- Mateusz Malinowski received the DAGM MVTec dissertation award as well as the Dr.-Eduard-Martin award for his PhD

- Associate Editor for IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI)

2020

Proceedings Articles

Prediction Poisoning: Utility-Constrained Defenses Against Model Stealing Attacks

In: International Conference on Learning Representations (ICLR), 2020.

Technical Reports

InfoScrub: Towards Attribute Privacy by Targeted Obfuscation

arXiv:2005.10329, 2020.

Workshops

SampleFix: Learning to Correct Programs by Sampling Diverse Fixes

NeurIPS Workshop on Computer-Assisted Programming, 2020.

2019

Journal Articles

MPIIGaze: Real-World Dataset and Deep Appearance-Based Gaze Estimation

In: IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI), 2019.

Book Sections

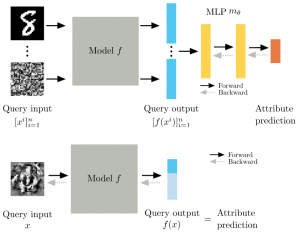

Towards Reverse-Engineering Black-Box Neural Networks

In: Explainable AI: Interpreting, Explaining and Visualizing Deep Learning, 2019.

Proceedings Articles

Attributing Fake Images to GANs: Learning and Analyzing GAN Fingerprints

In: International Conference on Computer Vision (ICCV), 2019.

Deep Appearance Maps

In: International Conference on Computer Vision (ICCV), 2019.

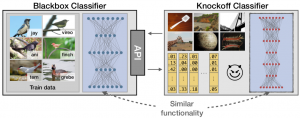

Knockoff Nets: Stealing Functionality of Black-Box Models

In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2019.

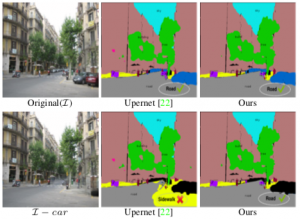

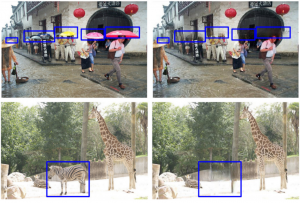

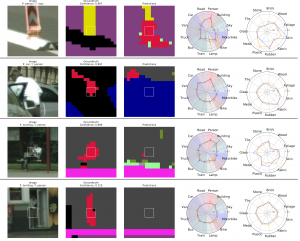

Not Using the Car to See the Sidewalk: Quantifying and Controlling the Effects of Context in Classification and Segmentation

In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2019.

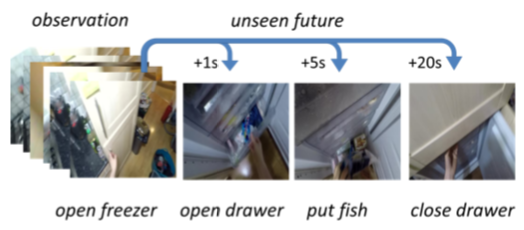

Time-Conditioned Action Anticipation in One Shot

In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2019.

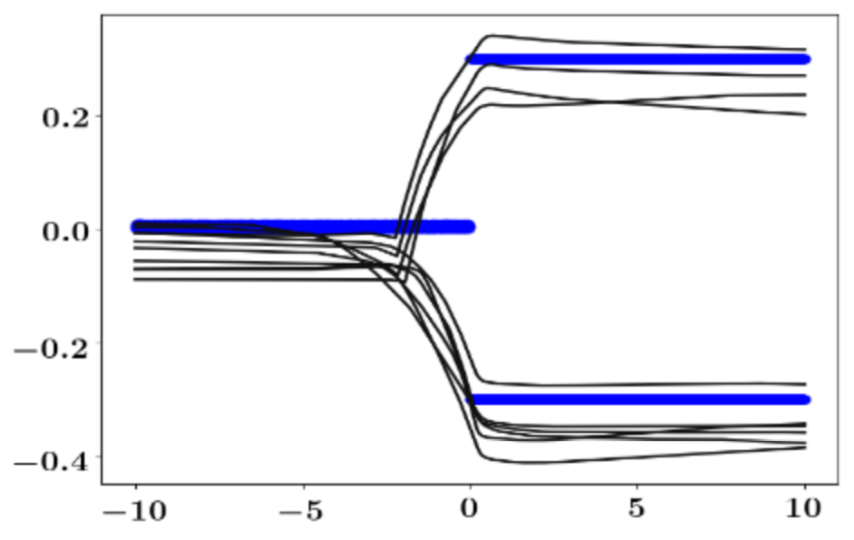

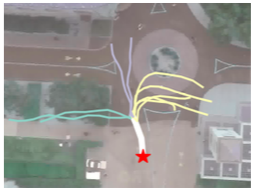

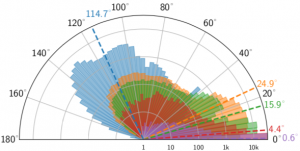

Bayesian Prediction of Future Street Scenes using Synthetic Likelihoods

In: International Conference on Learning Representations (ICLR), 2019.

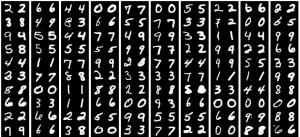

ML-Leaks: Model and Data Independent Membership Inference Attacks and Defenses on Machine Learning Models

In: Annual Network and Distributed System Security Symposium (NDSS), 2019.

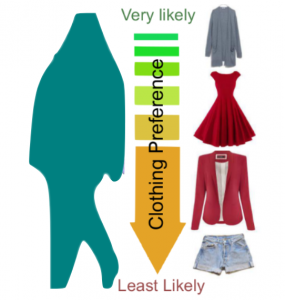

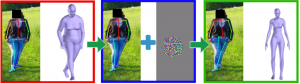

Fashion is Taking Shape: Understanding Clothing Preference Based on Body Shape From Online Sources

In: IEEE Winter Conference on Applications of Computer Vision (WACV), 2019.

Technical Reports

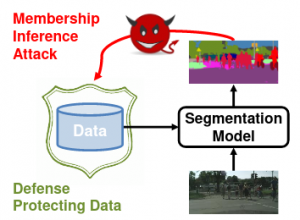

Segmentations-Leak: Membership Inference Attacks and Defenses in Semantic Image Segmentation

arXiv:1912.09685, 2019.

"Best-of-Many-Samples" Distribution Matching

arXiv:1909.12598, 2019.

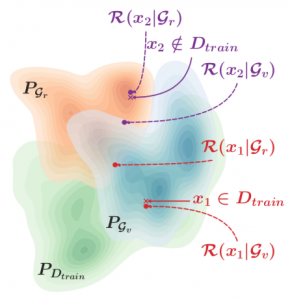

GAN-Leaks: A Taxonomy of Membership Inference Attacks against GANs

arXiv:1909.03935, 2019.

WhiteNet: Phishing Website Detection by Visual Whitelists

arXiv:1909.00300, 2019.

Conditional Flow Variational Autoencoders for Structured Sequence Prediction

arXiv:1908.09008, 2019.

Interpretability Beyond Classification Output: Semantic Bottleneck Networks

arXiv:1907.10882, 2019.

Prediction Poisoning: Utility-Constrained Defenses Against Model Stealing Attacks

2019.

SampleFix: Learning to Correct Programs by Sampling Diverse Fixes

arXiv:1906.10502, 2019.

Shape Evasion: Preventing Body Shape Inference of Multi-Stage Approaches

arXiv:1905.11503, 2019.

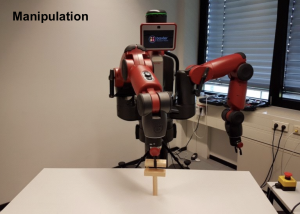

Learning Manipulation under Physics Constraints with Visual Perception

2019.

Updates-Leak: Data Set Inference and Reconstruction Attacks in Online Learning

2019.

Workshops

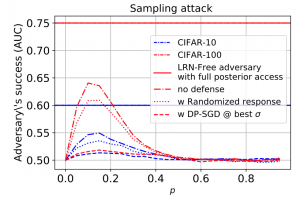

Differential Privacy Defenses and Sampling Attacks for Membership Inference

NeurIPS Workshop on Privacy in Machine Learning (PRIML), 2019.

Updates-Leak: Data Set Inference and Reconstruction Attacks in Online Learning

Hot Topics in Privacy Enhancing Technologies (HotPETs), 2019.

Understanding and Recognizing Bystanders in Images for Privacy Protection

Privacy, Usability, and Transparency (PUT) @ PETs, 2019.